Many of us have been around Wi-Fi for well over a decade now. In the beginning, we thought our job was to provide coverage, kind of like the Verizon cell phone commercials with the guy continually repeating, “Can you hear me now?” We assumed our job was to provide a radio signal at a certain minimum threshold, this invisible “coverage” thing, so that client devices could see the access point and transfer packets from the wireless network to the wired one.

We understood the way 802.11 clients and access points shared the RF medium. We had studied the 802.11 protocols and kind of felt comfortable with the process. But somehow, in the pursuit of coverage, we forgot many of the specific techniques used by Wi-Fi to communicate.

As a group of wired network engineers and architects, we have collectively forgotten how to design and troubleshoot a “hubbed” network. It has been at least 12 years since I purchased a managed hub and placed it on a wired Ethernet network. We’ve had switches for so long that we’ve forgotten about all the collision domain issues we used to fight with back before the turn of the century.

(I’m guessing many reading this have never worked on an enterprise network with brand new hubs…)

I was reminded of this very problem while doing some testing in a Large Public Venue (LPV) lately. This LPV had a capacity of 18,000 or so. In the first pass of the WLAN design stage, a vendor followed the design processes outlined in Andrew Von Nagy’s posts, presentation video, and materials at #WLPC.

All the major vendors use this same type of technique when designing LPV networks.

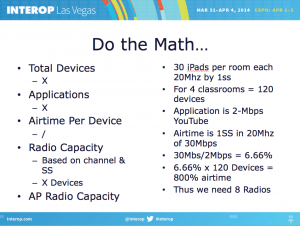

During Interop, Lee Badman and I presented the above slide in our WLAN Design session, using basically the same type of technique. It is all based on plugging in variables for the number of devices, types of applications, airtime per device, and capacity of radios to come up with a target figure for the number of access points needed in a specific situation.
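To make the calculation method concrete, here is a minimal sketch of that kind of arithmetic. It is not the slide's actual formula or any vendor's tool: the function name, the parameters, and every number in the usage line are hypothetical placeholders. The only figure borrowed from this post is the roughly 70% airtime ceiling.

```python
import math

def estimate_ap_count(seats, take_rate, per_device_mbps,
                      radio_capacity_mbps, usable_airtime=0.7, radios_per_ap=1):
    """Ballpark AP count from capacity assumptions alone.

    Deliberately ignores channels, co-channel contention, and RF propagation,
    which is exactly the blind spot of a calculation-only design.
    """
    active_devices = seats * take_rate                 # devices expected on Wi-Fi
    offered_load_mbps = active_devices * per_device_mbps
    usable_per_radio_mbps = radio_capacity_mbps * usable_airtime
    radios_needed = math.ceil(offered_load_mbps / usable_per_radio_mbps)
    return math.ceil(radios_needed / radios_per_ap)

if __name__ == "__main__":
    # All inputs are illustrative guesses, not measurements from the venue.
    print(estimate_ap_count(seats=18000, take_rate=0.3,
                            per_device_mbps=0.5, radio_capacity_mbps=40))
```

Notice that nothing in the function asks how many channels exist or how many radios can hear each other. It will happily return a bigger number of APs for a bigger load, whether or not there is spectrum for them.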

The issue in using ONLY the calculation method is that it is based on the premise of unlimited frequencies. It is an algorithm. It is merely doing mathematical calculations based on a series of assumptions. That is all fine and good, but it cannot predict whether the calculated designs will actually work in the real world.

In the real world, the methods and techniques defined in the 802.11 standards and protocols trump any calculation you might be using.

For example, in our last LPV test, we designed the site using the calculation method, with approximate estimates plugged in for the number of users, types of devices, types of applications, loading, etc. Altogether, these netted a total number of access points to meet the estimated needs. We installed and configured these, ready to go.

In subsequent testing, individual devices tested far above the initial requirements. 10, 20, 50, 100 devices were tested with fantastic results. Throughput numbers far exceeded design requirements. Surveys for coverage also showed fantastic RSSI in all locations of the venue. All was ready to go. Even the wired back end was prepared: DHCP servers, blocking of broadcast storms, segmented networks.

Then the attendees arrived. The first couple hundred devices on the network received maximum throughput up to the throttled per-device limit. Data rates were averaging above 36Mbps. All was right with the world.

As more people entered the venue, we closely watched the airtime, knowing if the number of time-slots for radio traffic got too low, like when airtime utilization exceeds 70% or so, we would be in for trouble. But the airtime stayed well below 35%.

But as more and more client devices joined the network, the overall throughput decreased, retry rates climbed, and CRC errors climbed to 20%, 25%, 30% and higher. Then the average data rates dropped. From an average of above 36Mbps, the average soon dropped to below 10Mbps. Over 60% of all data frames on the wireless LAN were now being transmitted at only the 6Mbps data rate!
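A quick bit of arithmetic shows why that slide from 36Mbps to 6Mbps hurts so much. The sketch below only counts the time the payload bits spend on the air. It leaves out preamble, ACKs, and contention backoff, so real frames take longer than this. The function name and the 1500-byte frame size are my own assumptions for illustration.

```python
def payload_airtime_us(frame_bytes, rate_mbps):
    """Microseconds on air for the payload bits only.

    Bits divided by Mbit/s gives microseconds directly.
    Preamble, ACK, and backoff time are intentionally not modelled.
    """
    return frame_bytes * 8 / rate_mbps

if __name__ == "__main__":
    fast = payload_airtime_us(1500, 36)   # assumed 1500-byte frame at 36Mbps
    slow = payload_airtime_us(1500, 6)    # same frame at 6Mbps
    print(f"36Mbps: {fast:.0f} us, 6Mbps: {slow:.0f} us, ratio: {slow / fast:.1f}x")
```

The same frame occupies the channel six times longer at 6Mbps than at 36Mbps, and every retry repeats that cost.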

Though the airtime never averaged over 35%, the throughput dropped to the floor: from nearing the throttled 10Mbps throughput cap to a measly <100Kbps!

The entire time, the coverage was FANTASTIC, with multiple -50dBm signals in 5GHz everywhere. Yet somehow this wireless network was brought to its knees in a matter of minutes after attendance climbed.

One other data point to throw in the mix. From one location, on one end of the venue, about halfway up the seats, I could see 4,500 client devices probing in 5GHz. After walking a full circle around the entire venue, twice, the device count only climbed to 5,500. So basically, from one location in the bowl, I could ‘see’ over 80% of client devices (4,500 of 5,500). Makes one go, hmmmm.

As a side note: in the example above, the entire data set was in 5GHz. We totally ignored 2.4GHz because it was nearly useless before any attendees arrived, with an overly high self-induced noise floor from so many radios using the same three frequencies.

We can use the calculation method of WLAN design all we want. Estimating client counts, application types, Erlang functions, AP radio capacities… but in the real world it isn’t about coverage and mathematics.

In the real world, wireless networks will always be judged on the client experience. A client’s experience is dictated by data throughput. Data throughput is all about frequency reuse.

Each frequency has a limited capacity. When we reach that capacity, the network can’t take any more, and throughput actually decreases. This isn’t caused only by saturation of airtime. (Though you can tell in our Wi-Fi Stress Test that when the RF was saturated, the throughput stopped.)
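One rough way to see the per-frequency limit is to count how many radios are forced to share each channel. This is a sketch under my own assumptions: the function name is made up, the radio count in the usage line is hypothetical, and it assumes a perfectly even channel plan in which every radio on a channel can hear the others. The three-channel figure is the 2.4GHz case mentioned above.

```python
import math

def radios_per_channel(radio_count, channel_count):
    """Fewest radios that must share the busiest channel under an even plan."""
    if channel_count < 1:
        raise ValueError("need at least one channel")
    return math.ceil(radio_count / channel_count)

if __name__ == "__main__":
    # 100 radios is an illustrative number, not the venue's actual count.
    print(radios_per_channel(100, 3))   # prints 34
```

Adding more radios does not add more channels. Past a certain point, each new radio just joins an existing contention domain unless the design keeps same-channel radios from hearing each other.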

The physical properties of an area can also cause loss of throughput. Where client and AP density is high enough, devices can interfere with each other’s traffic:

– this traffic can cause CRC errors

– these in turn cause retries

– with the increase in retries at a given data rate

– the client algorithms downgrade the data rate

– thus each frame takes more airtime

– thus increasing the chances of more CRC errors

– and the disastrous cycle continues.
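The cycle above can be sketched as a toy feedback loop. To be clear, this is not an 802.11 simulator and not any real client's rate-selection algorithm. The error model (a frame's chance of corruption equals the fraction of time other devices are on the air), the 20% downshift threshold, the per-device frame rate, and all names are assumptions made purely to show the shape of the spiral. The rate list is the standard 802.11a/g OFDM set.

```python
OFDM_RATES_MBPS = [54, 48, 36, 24, 18, 12, 9, 6]

def simulate_rate_spiral(contending_devices, start_rate=36, frame_bytes=1500,
                         frames_per_sec=0.2, retry_threshold=0.2, steps=10):
    """Return a list of (rate_mbps, error_rate) pairs, one per step."""
    idx = OFDM_RATES_MBPS.index(start_rate)
    history = []
    for _ in range(steps):
        rate = OFDM_RATES_MBPS[idx]
        airtime_s = frame_bytes * 8 / (rate * 1e6)   # payload time on air
        # Toy assumption: errors track how busy the other devices keep the air.
        error_rate = min(1.0, (contending_devices - 1) * frames_per_sec * airtime_s)
        history.append((rate, error_rate))
        # Toy rate control: too many errors means step down one rate.
        if error_rate > retry_threshold and idx < len(OFDM_RATES_MBPS) - 1:
            idx += 1
    return history

if __name__ == "__main__":
    for devices in (500, 4500):
        final_rate, final_err = simulate_rate_spiral(devices)[-1]
        print(devices, "devices ->", final_rate, "Mbps, error rate", round(final_err, 2))
```

In this toy model, a few hundred devices sit happily at the starting rate, while a few thousand tip over the threshold. Each downshift lengthens every frame, which raises the error rate, which triggers the next downshift, all the way to 6Mbps.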

Thus, we return to the title of this post: it is all about frequency reuse!

Calculations shouldn’t drive our wireless designs. They have their place in getting ballpark estimates, but our designs should be driven by our ability to design in frequency reuse.

It is all about frequency reuse!